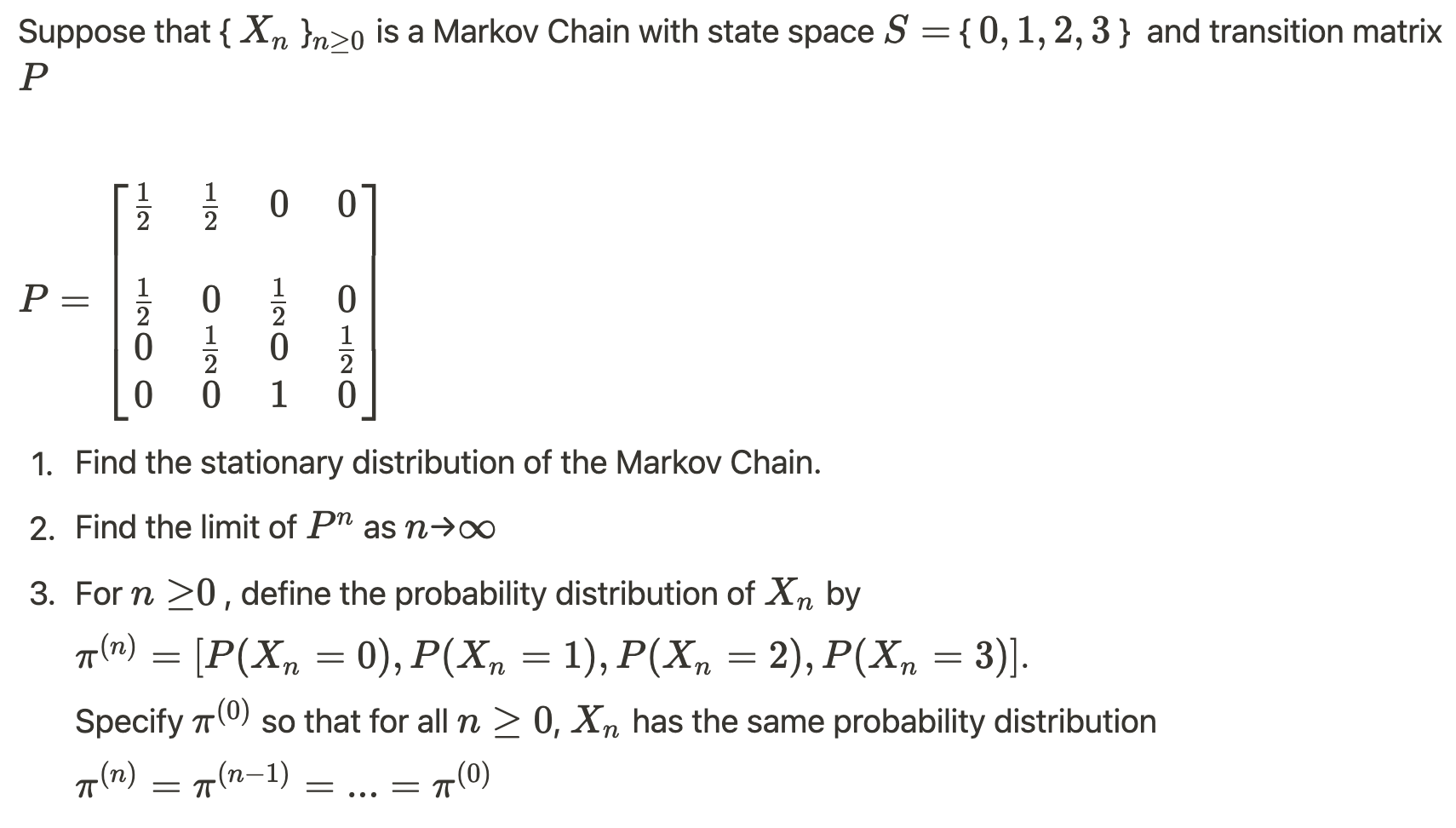

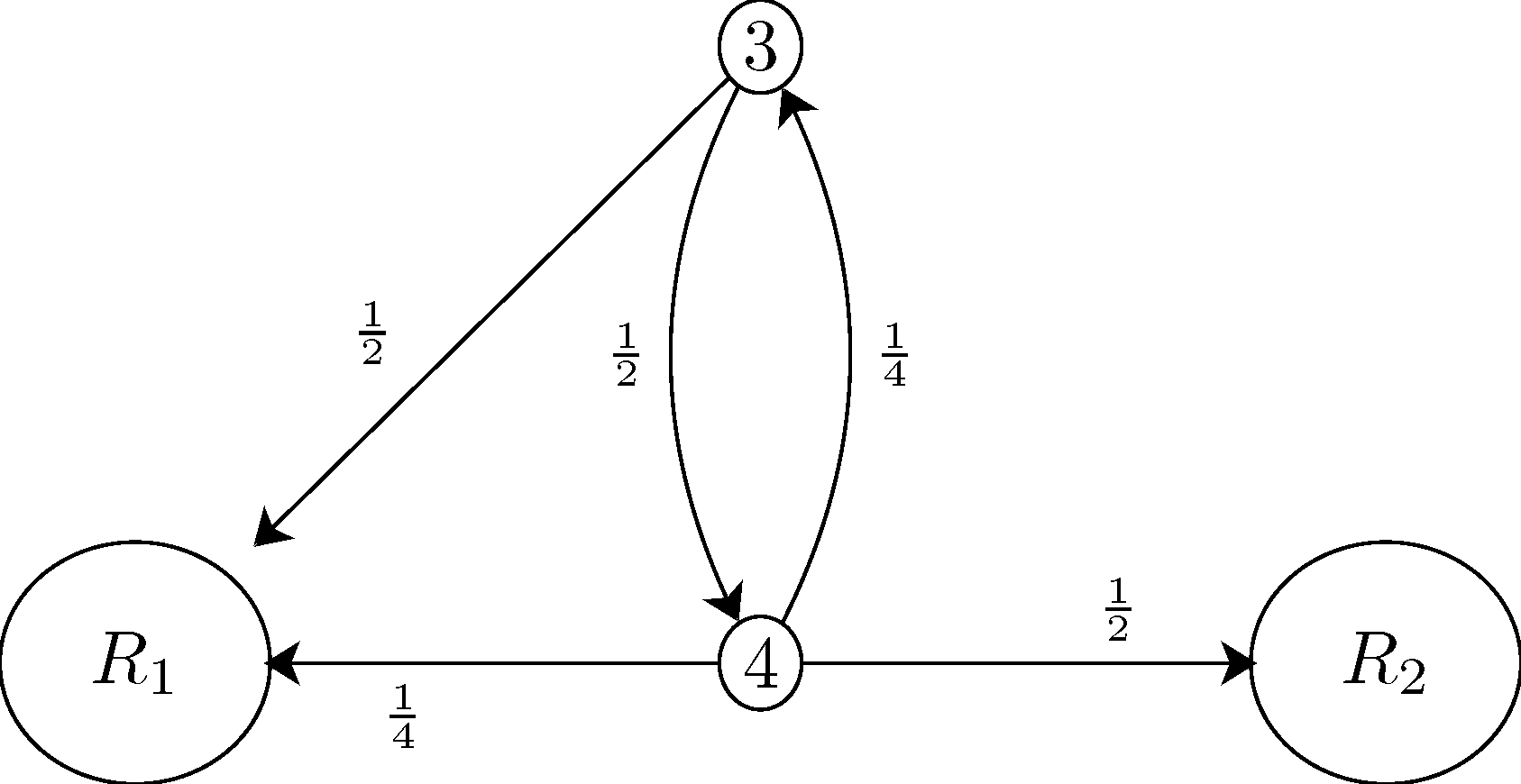

Markov Chains Contraction Approach, Lecture Notes - Mathematics | Study notes Operational Research | Docsity

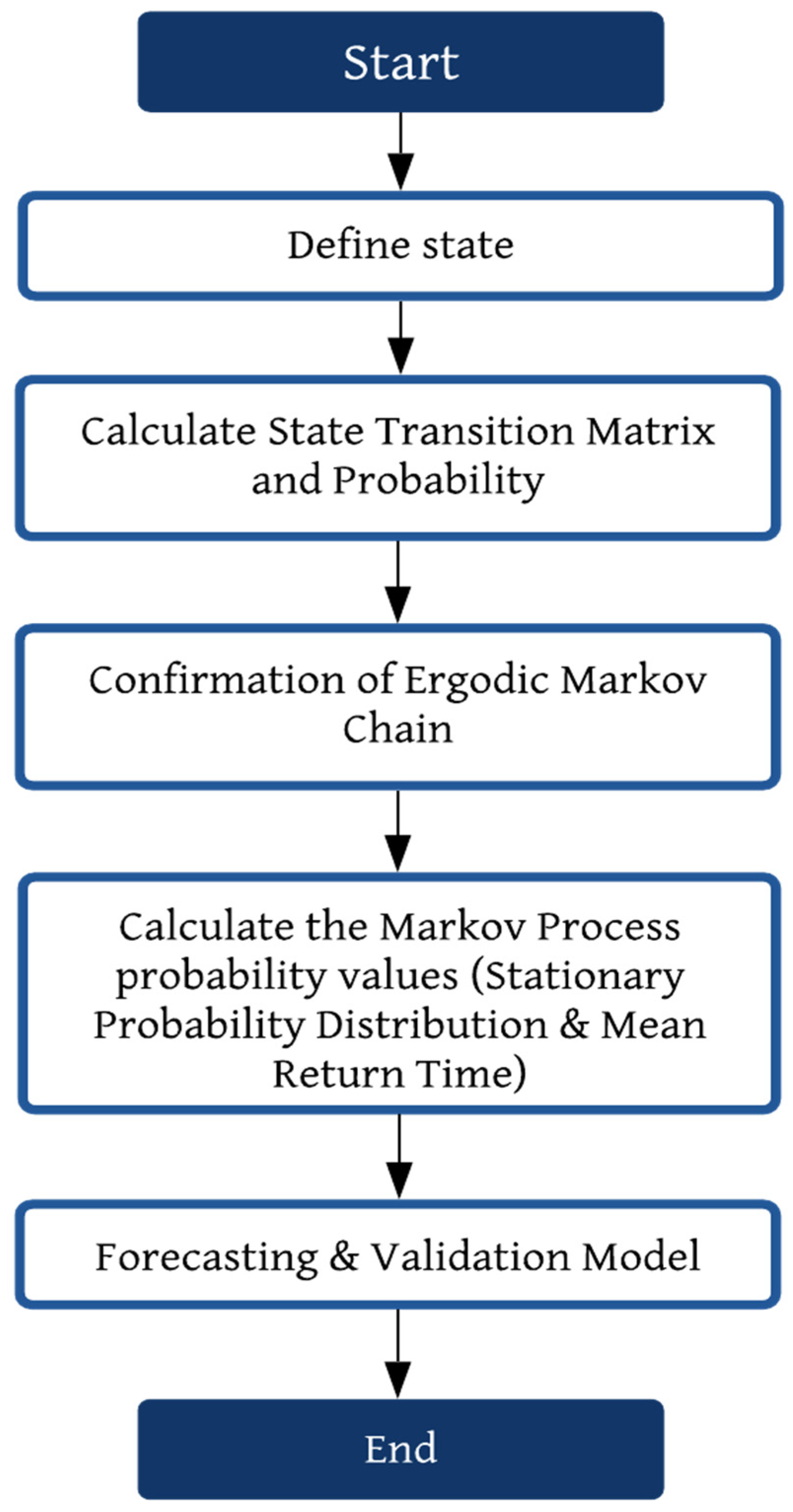

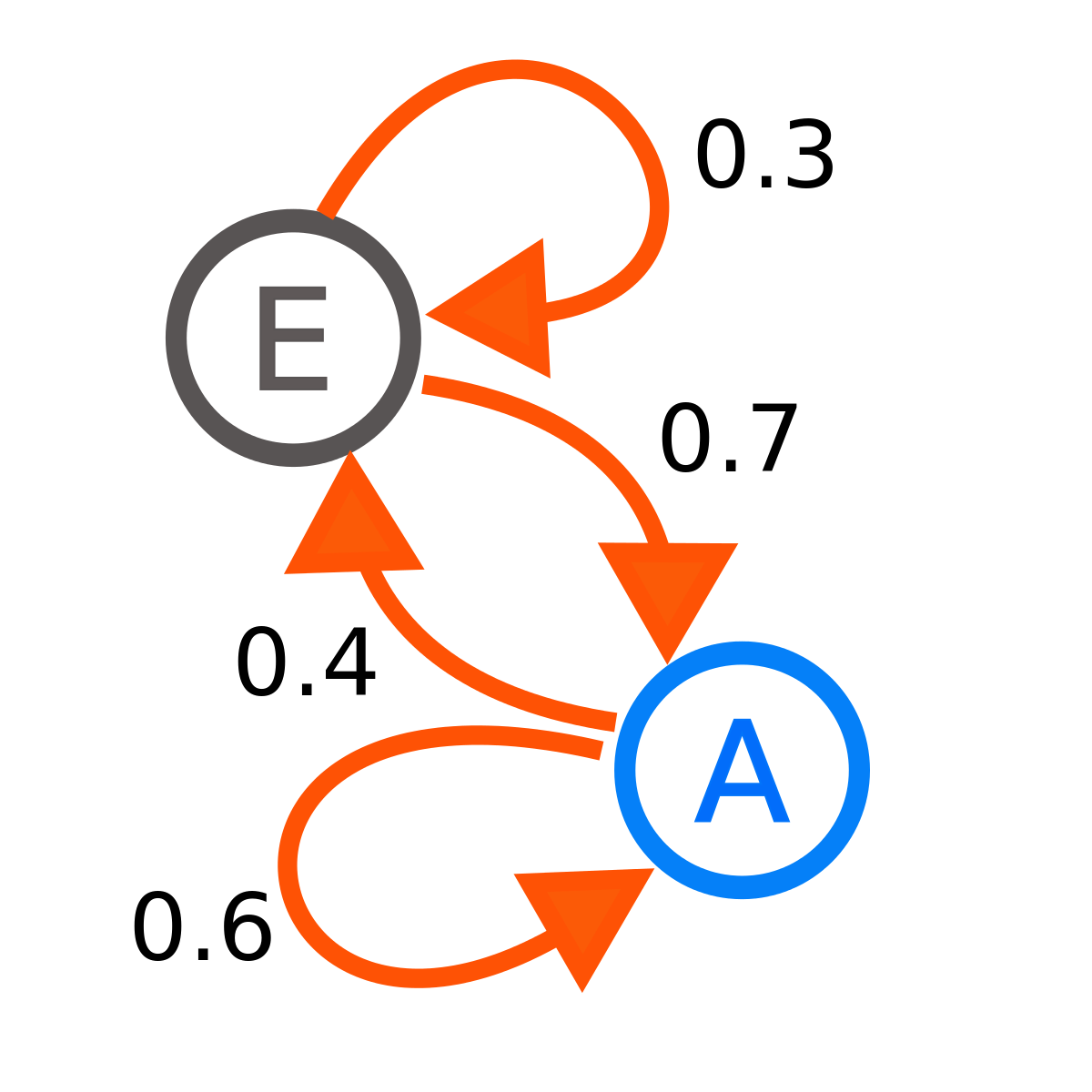

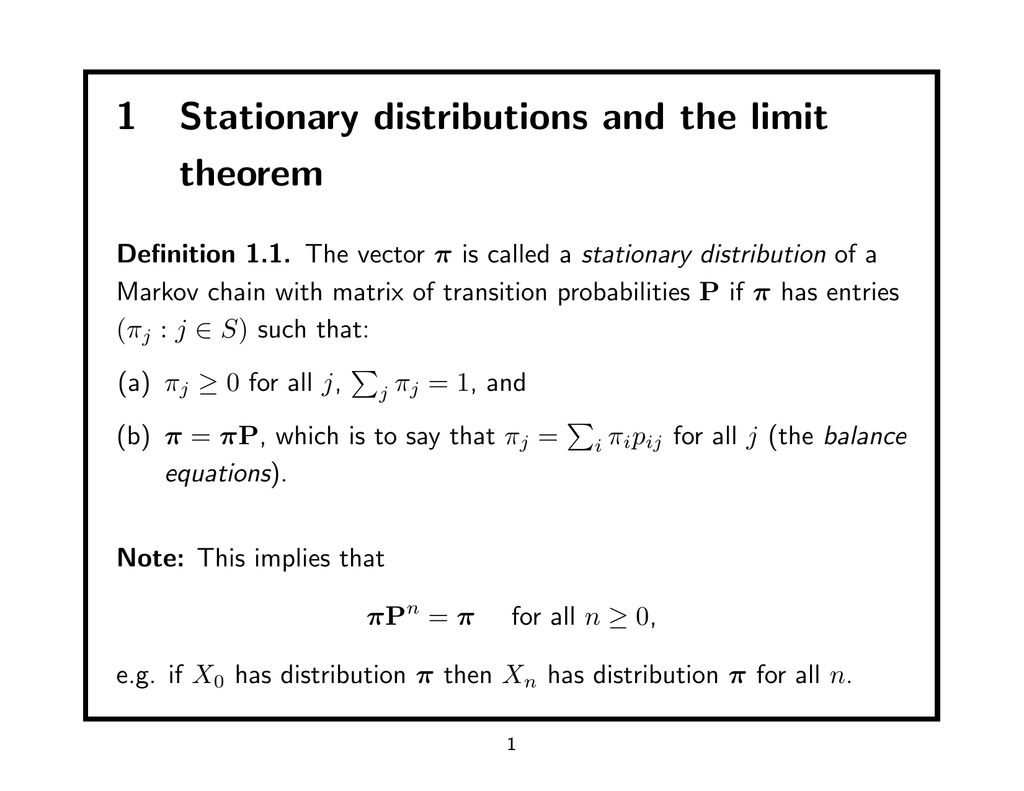

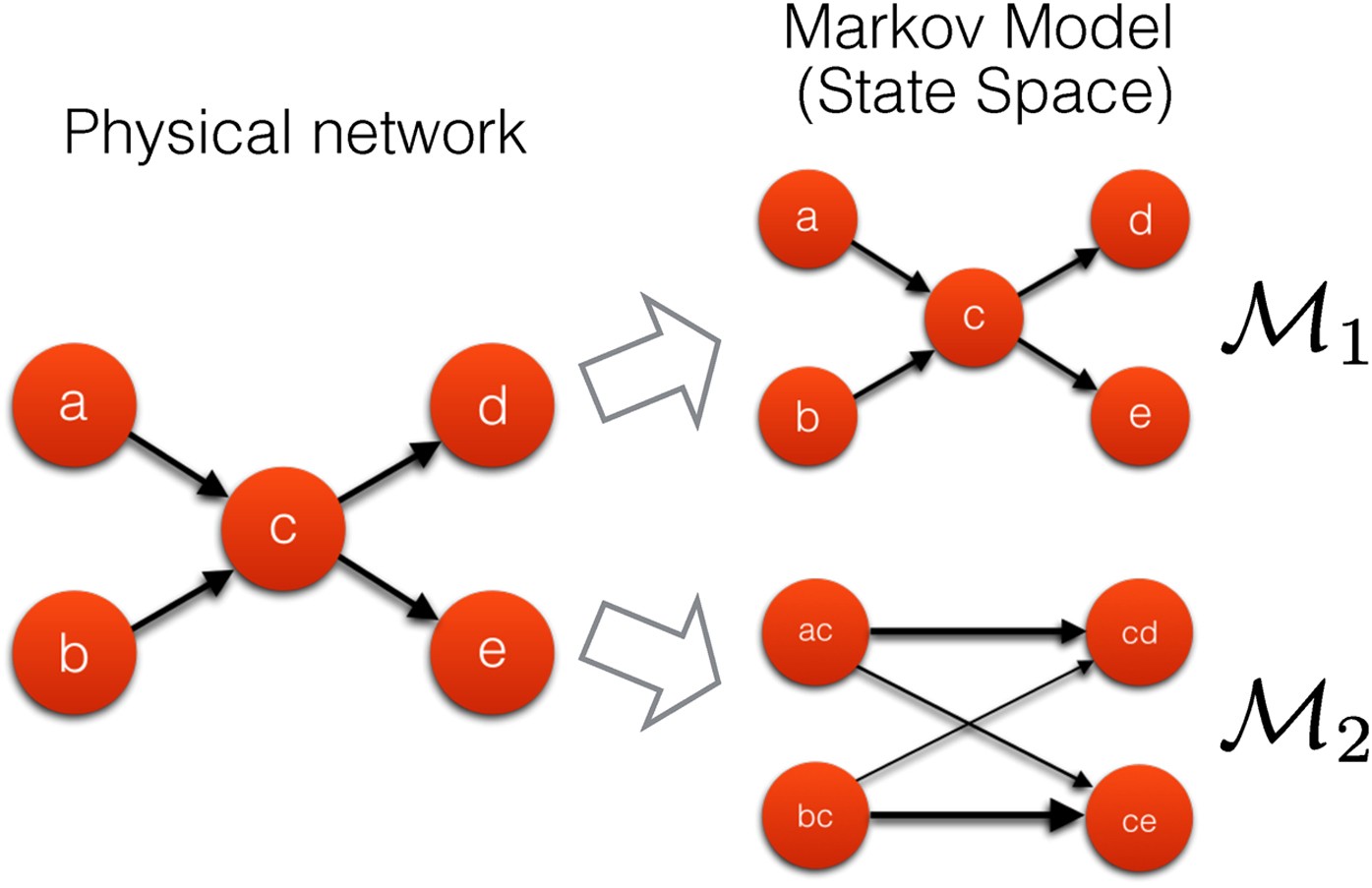

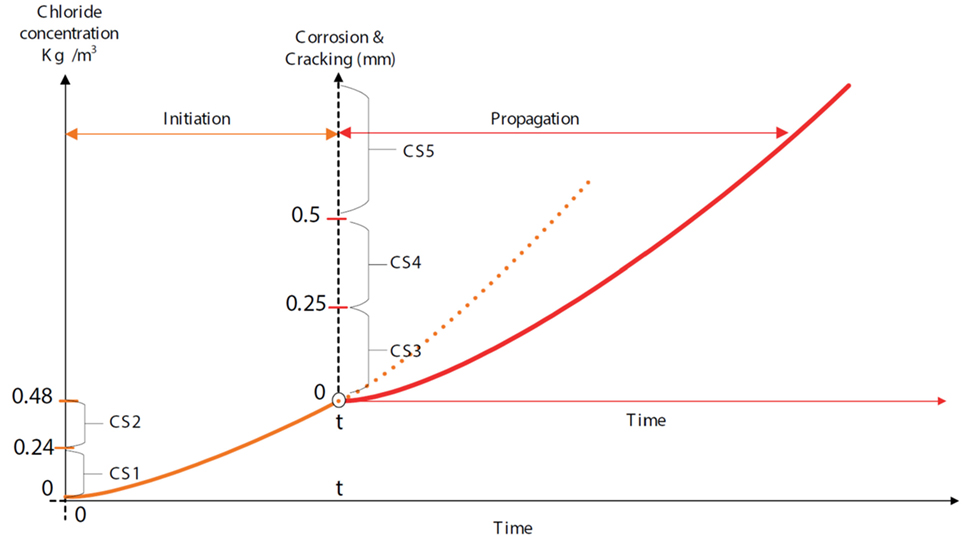

Frontiers | Improving the Estimation of Markov Transition Probabilities Using Mechanistic-Empirical Models

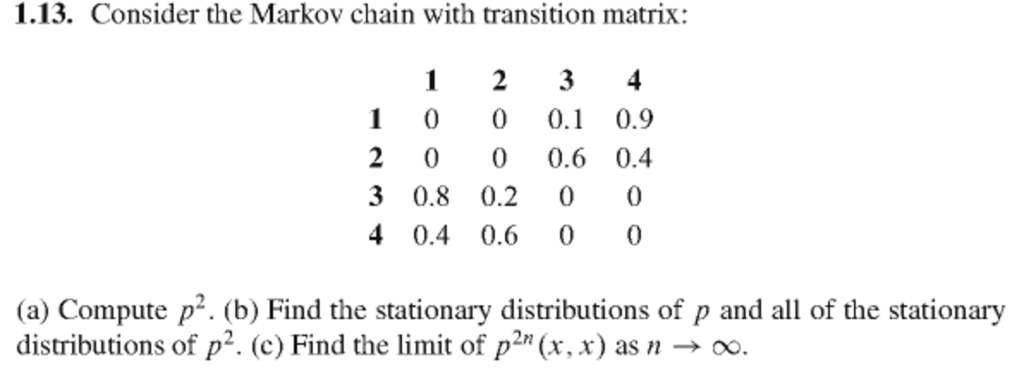

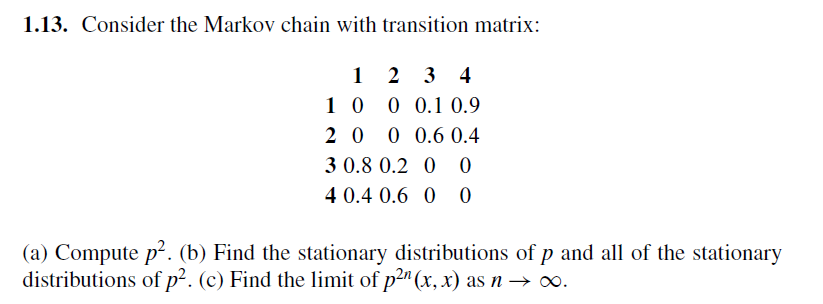

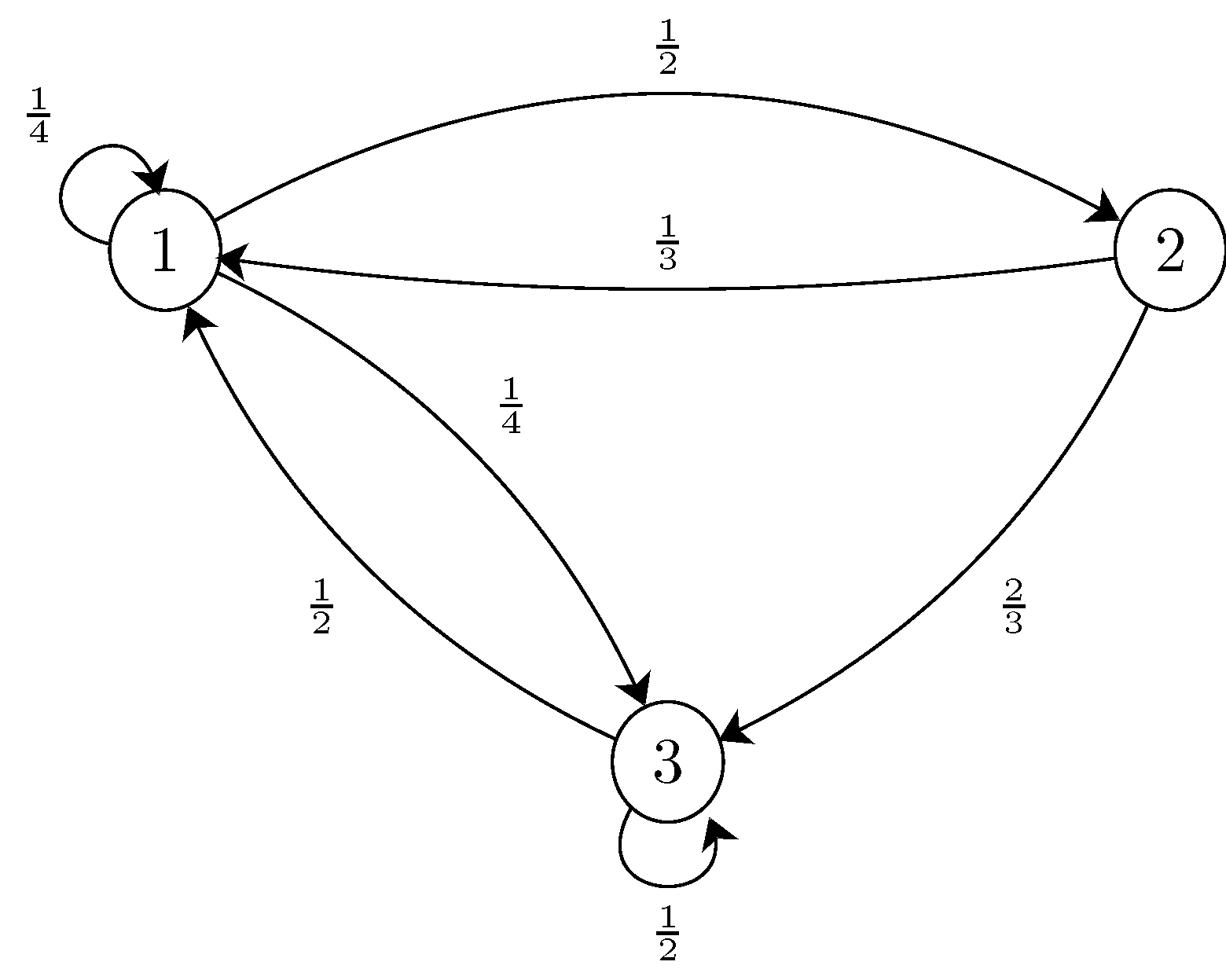

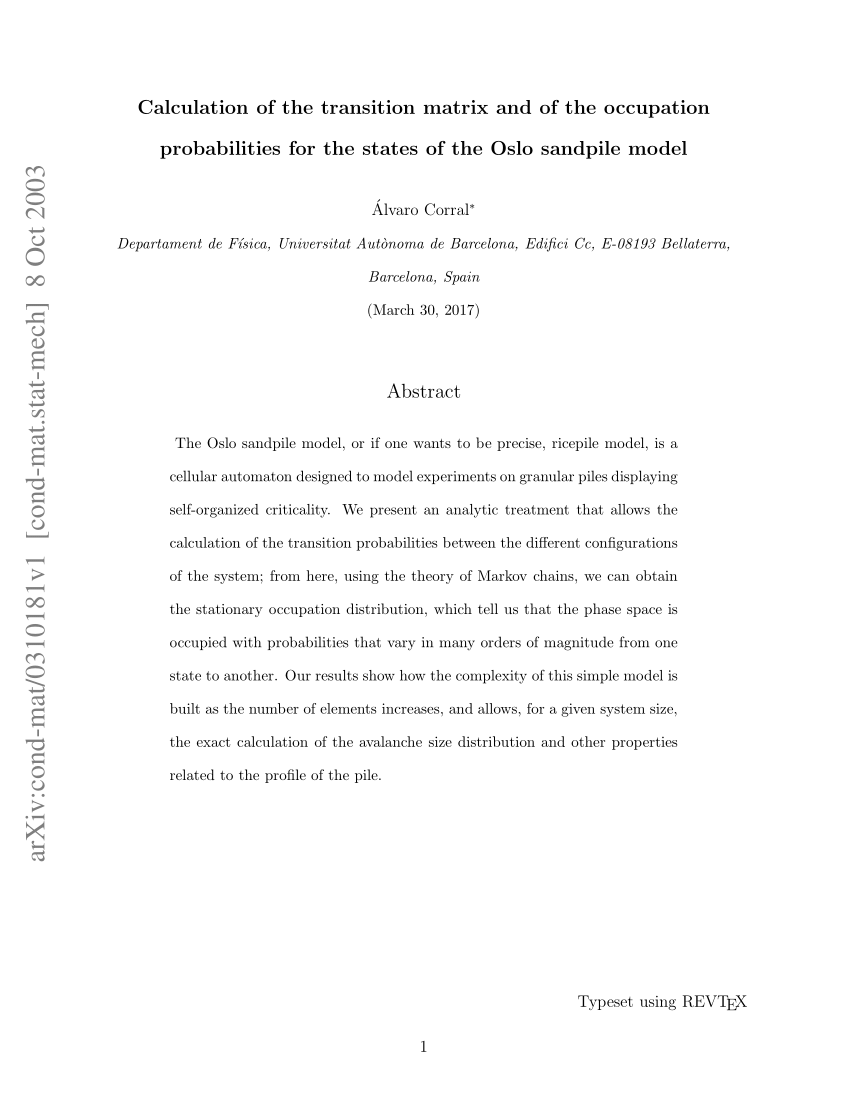

(PDF) Calculation of the transition matrix and of the occupation probabilities for the states of the Oslo sandpile model